Mastering Machine Learning: From Basics To Breakthroughs

Published 9/2024

MP4 | Video: h264, 1920x1080 | Audio: AAC, 44.1 kHz

Language: English | Size: 918.11 MB | Duration: 3h 38m

Machine Learning, Supervised Learning, Unsupervised Learning, Regression, Classification, Clustering, Markov Models

What you'll learn

Explore the fundamental mathematical concepts of machine learning algorithms

Apply linear machine learning models to perform regression and classification

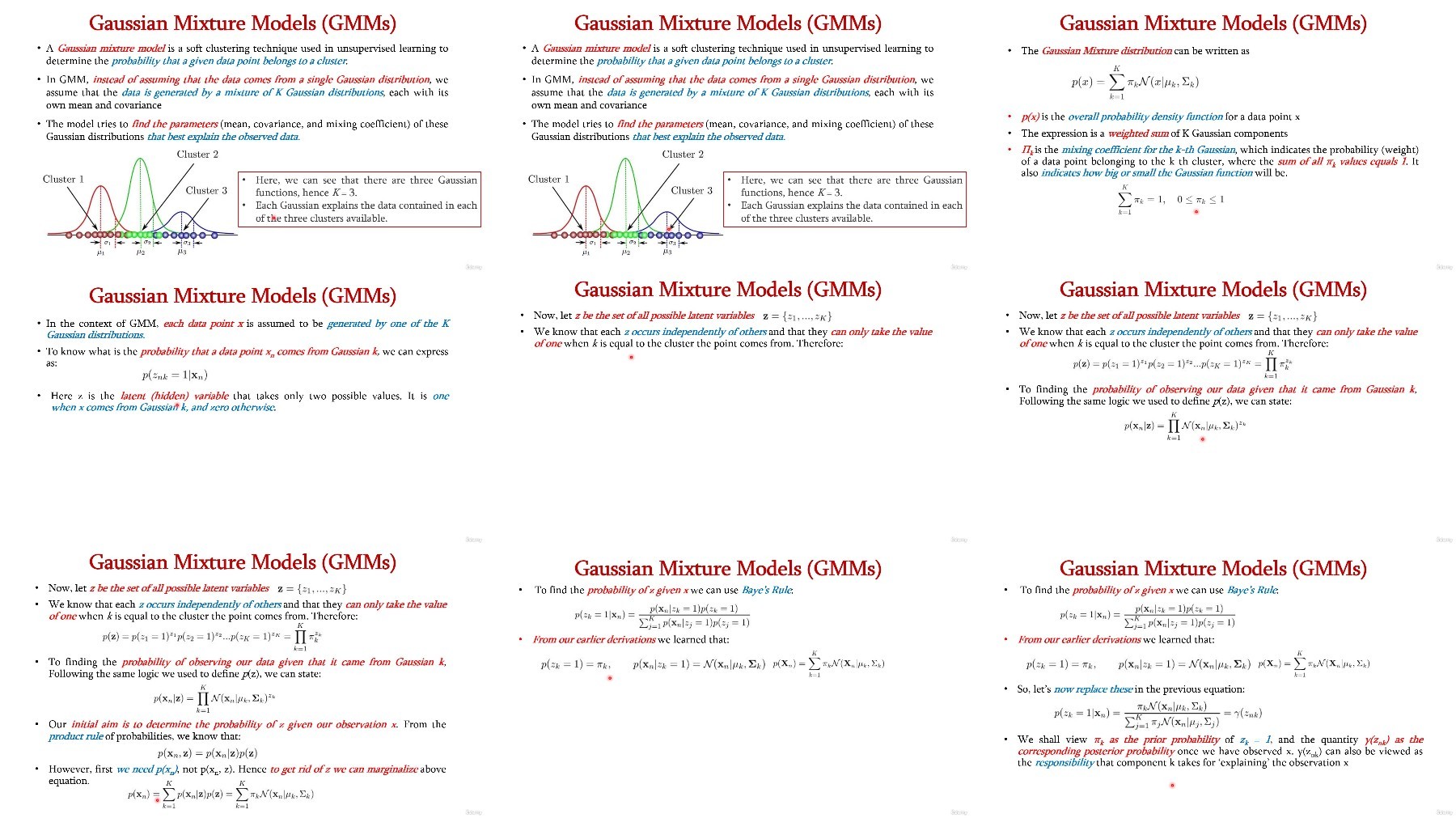

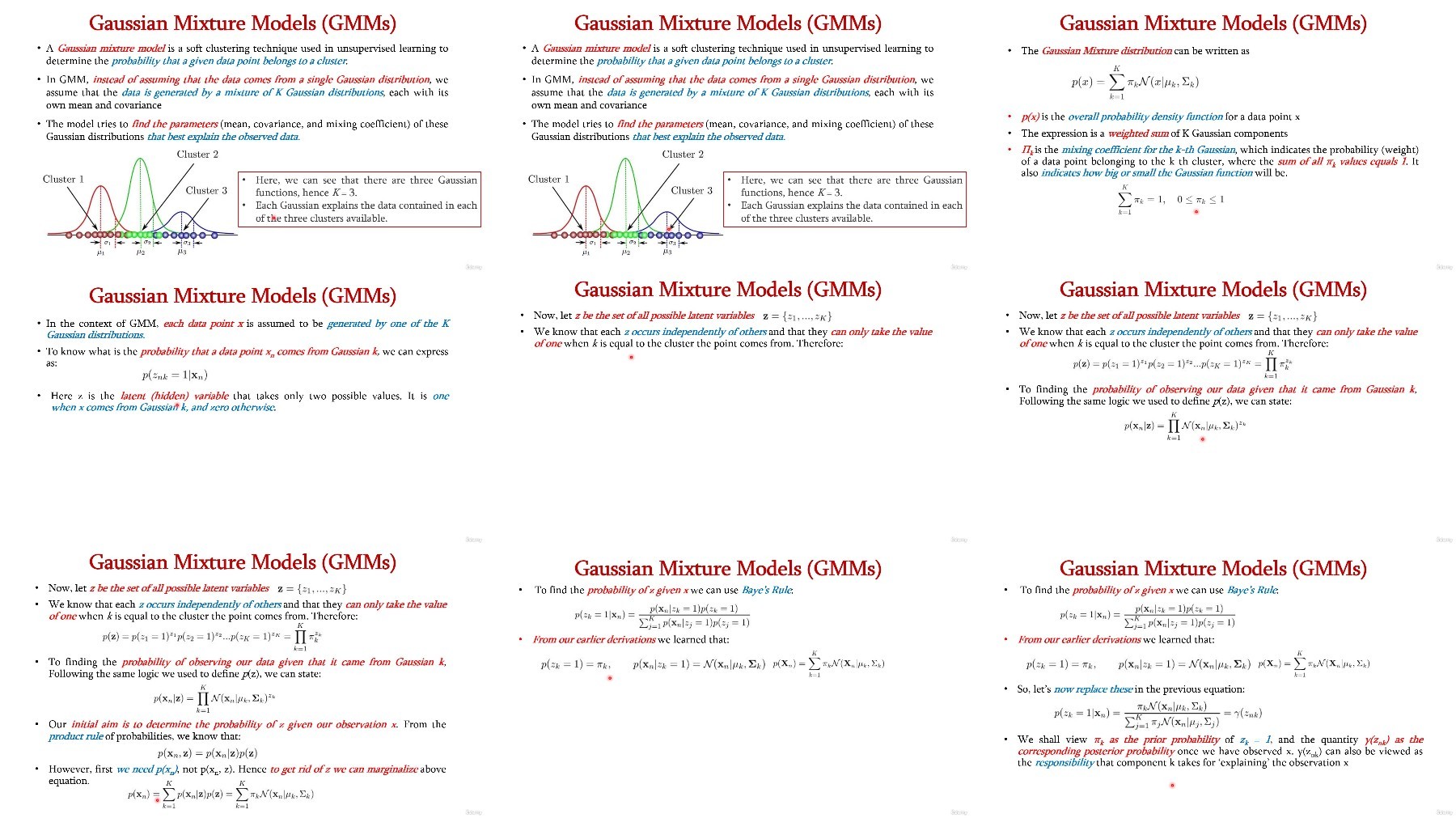

Utilize mixture models to group similar data items

Develop machine learning models for time-series data prediction

Design ensemble learning models using various machine learning algorithms

Requirements

Foundations of Mathematics and Algorithms

Description

This machine learning course offers a comprehensive introduction to the core concepts, algorithms, and techniques that form the foundation of modern machine learning. Focused on theory rather than hands-on coding, it covers supervised and unsupervised learning, regression, classification, clustering, and dimensionality reduction. Learners explore how these algorithms work and where they are applied across various domains.

The course emphasizes theoretical knowledge, providing a grounding in model evaluation, the bias-variance trade-off, overfitting and underfitting, and regularization. It also covers the mathematical foundations (linear algebra, probability, statistics, and optimization) needed to understand the inner workings of machine learning models.

The course is aimed at students, professionals, and enthusiasts with a basic understanding of mathematics and programming who want a strong conceptual understanding of machine learning without hands-on implementation. It serves as a foundation for further study and practical work, enabling learners to assess model performance, interpret results, and understand the theoretical basis of machine learning solutions.

By the end of the course, participants will be prepared to study machine learning in more depth or to apply their knowledge in data-driven fields; no programming or software usage is required during the course.
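The description lists overfitting and regularization, and the outline covers polynomial curve fitting (Lecture 3) and ridge regression (Lecture 11). The course itself is theory-only, so the following is an illustration that is not taken from the course: a minimal pure-Python sketch of ridge regression on polynomial features, solving the regularized normal equations (X^T X + lam I) w = X^T y. The helper names (`solve`, `poly_features`, `ridge_fit`) and the toy data are hypothetical, and for simplicity the bias term is penalized along with the other weights.

```python
from typing import List

Matrix = List[List[float]]


def solve(a: Matrix, b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / m[i][i]
    return x


def poly_features(xs: List[float], degree: int) -> Matrix:
    """Map each scalar x to the row [1, x, x^2, ..., x^degree]."""
    return [[x ** k for k in range(degree + 1)] for x in xs]


def ridge_fit(X: Matrix, y: List[float], lam: float) -> List[float]:
    """Minimize ||y - Xw||^2 + lam * ||w||^2 via (X^T X + lam I) w = X^T y.

    Simplification (assumption): the bias coefficient w[0] is penalized too.
    """
    d = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) + (lam if i == j else 0.0)
            for j in range(d)] for i in range(d)]
    xty = [sum(row[i] * t for row, t in zip(X, y)) for i in range(d)]
    return solve(xtx, xty)


if __name__ == "__main__":
    # Hypothetical toy data: six points, fitted with a degree-5 polynomial.
    xs = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
    ys = [0.1, 0.9, 1.0, 0.2, -0.8, -0.9]
    X = poly_features(xs, degree=5)
    for lam in [0.0, 1e-3, 1e-1, 1.0]:
        w = ridge_fit(X, ys, lam)
        norm = sum(v * v for v in w) ** 0.5
        print(f"lambda={lam:g}  ||w||={norm:.3f}")
```

With `lam=0` and as many parameters as data points, the polynomial interpolates the data exactly, which is the overfitting case; increasing `lam` shrinks the weight norm and trades some training-set fit for a smoother curve.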

Overview

Section 1: Introduction

Lecture 1 Introduction to Machine Learning

Lecture 2 Types of Machine Learning

Lecture 3 Polynomial Curve Fitting

Lecture 4 Probability

Lecture 5 Total Probability, Bayes' Rule and Conditional Independence

Lecture 6 Random Variables and Probability Distribution

Lecture 7 Expectation, Variance, Covariance and Quantiles

Section 2: Linear Models for Regression

Lecture 8 Maximum Likelihood Estimation

Lecture 9 Least Squares Method

Lecture 10 Robust Regression

Lecture 11 Ridge Regression

Lecture 12 Bayesian Linear Regression

Lecture 13 Linear Models for Classification: Discriminant Functions

Lecture 14 Probabilistic Discriminative and Generative Models

Lecture 15 Logistic Regression

Lecture 16 Bayesian Logistic Regression

Lecture 17 Kernel Functions

Lecture 18 Kernel Trick

Lecture 19 Support Vector Machine

Section 3: Mixture Models and EM

Lecture 20 K-Means Clustering

Lecture 21 Mixtures of Gaussians

Lecture 22 EM for Gaussian Mixture Models

Lecture 23 PCA: Choosing the Number of Latent Dimensions

Lecture 24 Hierarchical Clustering
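Lecture 20 opens the mixture-model section with K-means clustering. As an illustration that is not material from the course, here is a minimal sketch of the standard Lloyd iteration: alternately assign each point to its nearest centroid and move each centroid to the mean of its assigned points, stopping when the centroids no longer change. The function name `kmeans`, the caller-supplied initial centroids, and the toy points are assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def kmeans(points: Sequence[Point], init: Sequence[Point], max_iter: int = 100):
    """Lloyd's algorithm for K-means with caller-supplied initial centroids."""
    centroids = [tuple(c) for c in init]
    labels: List[int] = []
    for _ in range(max_iter):
        # Assignment step: index of the nearest centroid (Euclidean distance).
        labels = [min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
                  for p in points]
        # Update step: each centroid moves to the mean of its assigned points.
        new_centroids = []
        for k, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                new_centroids.append(tuple(sum(coord) / len(members) for coord in zip(*members)))
            else:
                new_centroids.append(old)  # keep an empty cluster's centroid in place
        if new_centroids == centroids:  # converged: assignments can no longer change
            break
        centroids = new_centroids
    return centroids, labels


# Hypothetical toy data: two well-separated groups of three points each.
pts = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
centers, labels = kmeans(pts, init=[(0.0, 0.0), (10.0, 10.0)])
print(labels)  # [0, 0, 0, 1, 1, 1]
```

K-means uses hard assignments; EM for Gaussian mixtures (Lectures 21 and 22) replaces them with soft responsibilities, and K-means can be viewed as a limiting case of that procedure.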

Who this course is for

Students, data scientists, and engineers seeking to solve data-driven problems through predictive modeling
