Coursera - Generative AI Language Modeling with Transformers

Description material

189.12 MB | 00:08:20 | mp4 | 1280X720 | 16:9

Genre: eLearning | Language: English

Files Included:

01 course-introduction (4.41 MB)

01 positional-encoding (11.16 MB)

02 attention-mechanism (11.99 MB)

03 self-attention-mechanism (11.99 MB)

04 from-attention-to-transformers (11.07 MB)

05 transformers-for-classification-encoder (14.87 MB)

01 language-modeling-with-the-decoders-and-gpt-like-models (11.12 MB)

02 training-decoder-models (11.29 MB)

03 decoder-models-pytorch-implementation-causal-lm (13 MB)

04 decoder-models-pytorch-implementation-using-training-and-inference (10.61 MB)

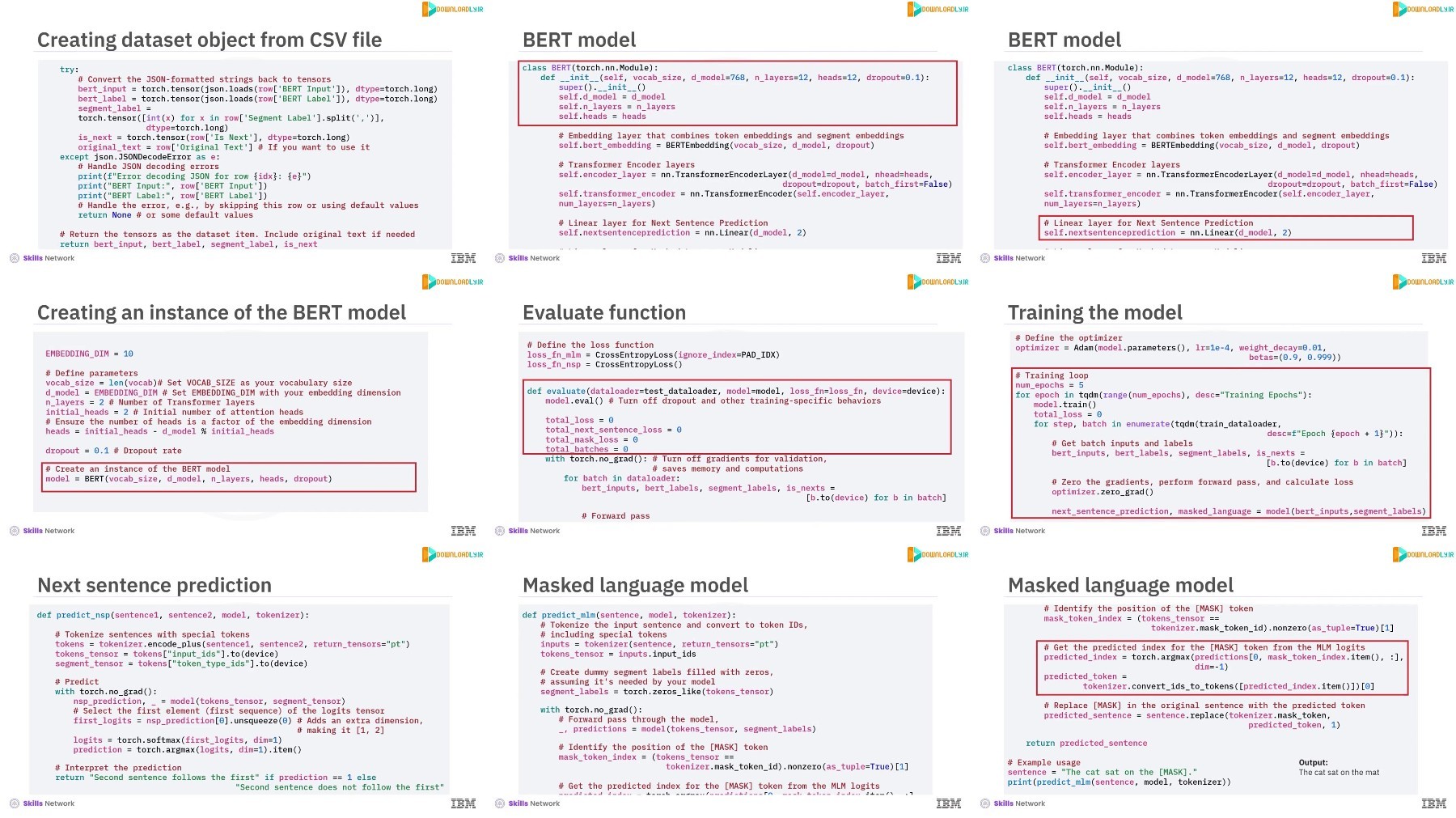

01 encoder-models-with-bert-pretraining-using-mlm (8.81 MB)

02 encoder-models-with-bert-pretraining-using-nsp (9.91 MB)

03 data-preparation-for-bert-with-pytorch (17.25 MB)

04 pretraining-bert-models-with-pytorch (17.99 MB)

01 transformer-architecture-for-language-translation (7.87 MB)

02 transformer-architecture-for-translation-pytorch-implementation (15.56 MB)

