Fine-Tuning LLMs for Cybersecurity: Mistral, Llama, AutoTrain, and AutoGen

.MP4, AVC, 1280x720, 30 fps | English, AAC, 2 Ch | 2h 52m | 376 MB

Instructor: Akhil Sharma

Explore the emerging field of cybersecurity enhanced by large language models (LLMs) in this detailed, interactive course. Instructor Akhil Sharma starts with the basics: the landscape of open-source LLMs, their architecture and importance, and how they differ from closed-source models.

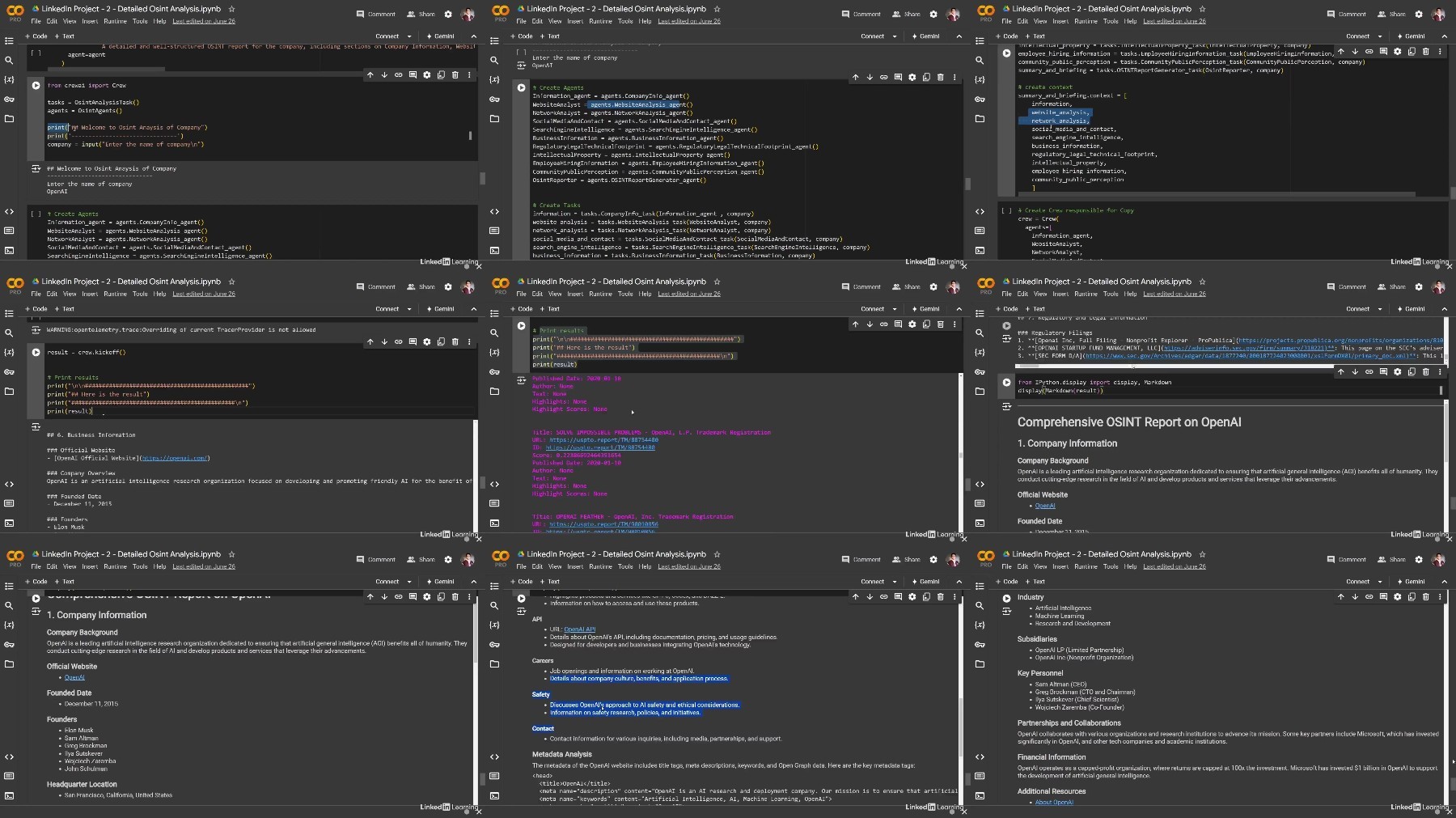

Learn how to run and fine-tune models to tackle cybersecurity challenges more effectively. Hands-on examples and guided challenges cover identifying new threats, generating synthetic data, performing open-source intelligence (OSINT), and scanning code for vulnerabilities. The course is aimed at cybersecurity professionals, IT specialists, and anyone who wants to understand how AI can strengthen security protocols, and it prepares you to apply AI to cybersecurity to improve threat detection, prevention, and response.

Learning objectives

- Explain the fundamental concepts and architectures of open-source large language models (LLMs), including GPT-style transformer models.

- Analyze the potential applications of LLMs in enhancing cybersecurity measures, including threat detection, penetration testing, and phishing defense.

- Develop practical skills in integrating LLM-powered systems into existing cybersecurity protocols and infrastructures.

- Construct and fine-tune LLMs tailored for specific cybersecurity applications, such as automated penetration testing or advanced phishing detection.

- Build LLM agent workflows for security tooling such as web vulnerability scanning and OSINT.

- Evaluate the effectiveness of AI-driven cybersecurity solutions and identify areas for optimization and improvement to adapt to evolving cyber threats.

Exercise Files on GitHub
